Wellcome to Sergey Kirgizov's library,

You can find here all papers liked or uploaded by Sergey Kirgizovtogether with brief user bio and description of her/his academic activity.

Sergey's personsal site : http://kirgizov.link.

☆

3

Comments:

##To take away:##

- This paper is about a slight improvement of the $k$-clique Algorithm of Chiba and Nishizeki

- The performance in practice on sparse graphs is impressive

- The parallelization is non-trivial and the speedup is nearly optimal up to 40 threads

- Authors generate a stream of k-cliques to compute "compact" subgraphs

- A parallel C code is available here: https://github.com/maxdan94/kClist

##Suggestions to extend this work:##

- Can we find a node ordering better than the core ordering?

- Generate a stream of $k$-cliques to compute other quantities?

- Generalize the algorithm to $k$-motifs?

- Parallelization on higher order $k$-cliques if more threads are available?

Slides of the talk: https://drive.google.com/file/d/15MVJ2TzkdsHcyF6tE4VeYQqH8bU0kzDE/view

> Another extension: can we guarantee a given order on the output stream? that it is uniformly random, for instance?

I think that this is a very interesting and open question! I have tried to generate a stream of k-cliques such that the order is random by modifying the kClist algorithm. But I was not able to do so.

I wanted to do that in order to optimize a function depending on the k-cliques using stochastic gradient descent. I have found that using a random ordering lead to a faster convergence than using the order the k-cliques are outputed by the kClist algorithm.

Here is what I've tried:

- If you have enough RAM, then you can of course store all k-cliques and do a [random permutation](https://en.wikipedia.org/wiki/Fisher%E2%80%93Yates_shuffle). But, since you mention "steam", I do not think that this is the case for you.

- You can use another node-ordering (different from the core-ordering) to form the DAG. You can use, for instance, a random node ordering. You may lose the theoretical upperbound on the running time, but you will see that, in practice, the algorithm is still very fast (say twice slower than with the core ordering (but this depends on the input graph and k, you may also find some settings where it is actually faster than with the core ordering)). The order the k-cliques are stream will then change, but it will not be uniform at random.

- Once you have formed the DAG using the node ordering (core ordering or any other ordering), you do not need to process the nodes in that same order. You can use another random ordering for that. It will add some randomness in the stream, but the order will still not be uniform at random.

Please let me know if you have any better ideas.

> Another extension: can we guarantee a given order on the output stream? that it is uniformly random, for instance?

One possible way to do is using a buffer, however, it remains non uniform. A buffer of size n/100 can be filled at first using the first n/100 output. Afterwards, one K-clique is randomly selected to be « outputted » and replaced with a new k-clique. The larger the buffer, the closer the ouput will be to a uniformly random output.

☆

1

Comments:

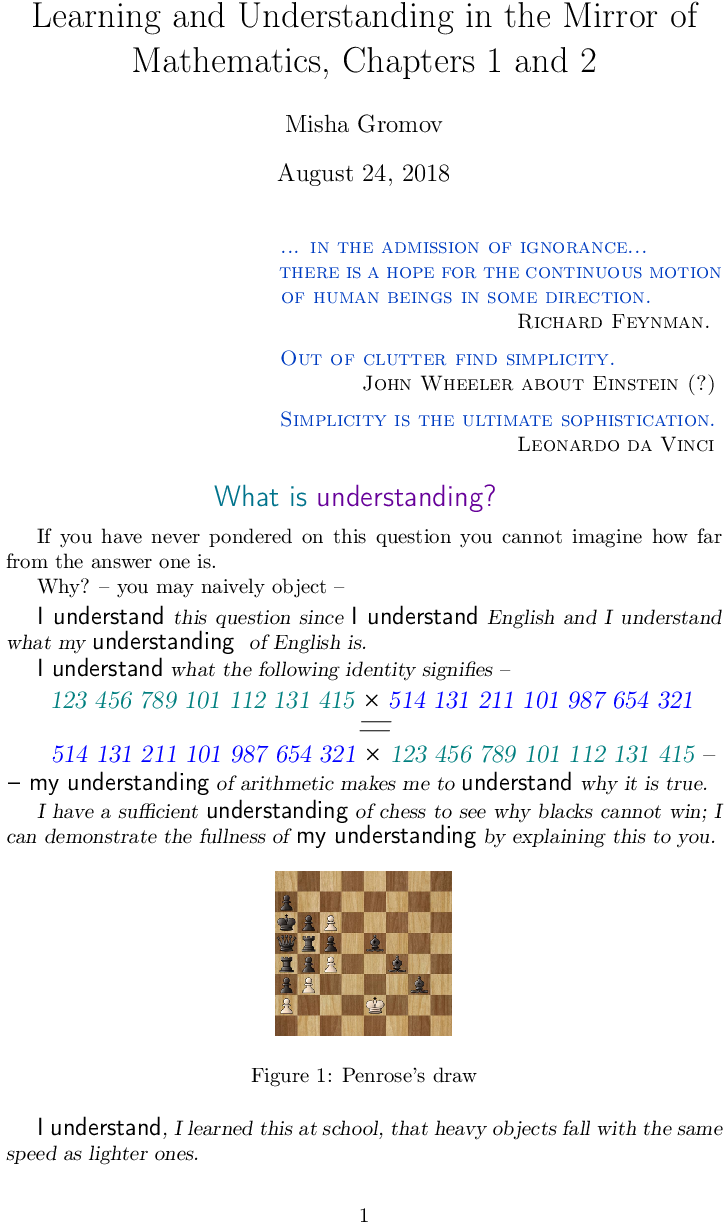

This is not a usual scientific paper, not a metaphysical tractatus either.

It is accessible for for people with different backgrounds.

Author tries to convince us that "the core of the human mind is simple and its structure

is comprehensible". If I understand correctly, he believes that we (humanity or an individual?) will eventually develop a mathematical theory allowing us to understand our own understanding.

Nevertheless, we should surmount a strange barrier of unclear shape and unknown size to achieve the goal.

The power of our imagination could help... Who knows ?

Some phrases are very remarkable, for example :

* *In reality, no matter how hard some mathematicians try to achieve the contrary,

subexponential time suffices for deciphering their papers.*

* *For instance, an intelligent human and an equally intelligent gigantic squid

may not agree on which of the above three blobs are abstract and which are

concrete.*

* *To see what iterations of self-references do, try it with "the meaning of". The first iteration "meaning of meaning" tells you what the (distributive) meaning really is. But try it two more times, and what come out of it,

"meaning of meaning of meaning of meaning" strikes you as something meaningless.*

The mind experiment from the third point suggests that our brain uses some type of hash function dealing with self-referential iterations. The special hash function that have the following property:

it is possible to take a inverse of such hash, but it takes some time;

inversion of a double hash becomes more complicated; its virtually impossible

to inverse a triple-hash application.

If "hash inversion" and "understanding" are linked in some way, this could lead to something interesting...

The hash function in this case works like a lossy compression algorithm.

Comments: